Over the next two days HPN will look at the wash-up of the 2016 AFL Draft. Today we will look at how each individual phantom draft went, and tomorrow we will look at how each club did, and which players slid from contention.

Analysing the experts (and ammos)

To make the Consensus Phantom Draft we compile a bunch of drafts from a bunch of experts – this year thirteen ended up in the final cut before the draft. While the CPD is a useful barometer of each player’s draft stock before the draft, it’s also handy to look at how reliable each expert is in their predictions.

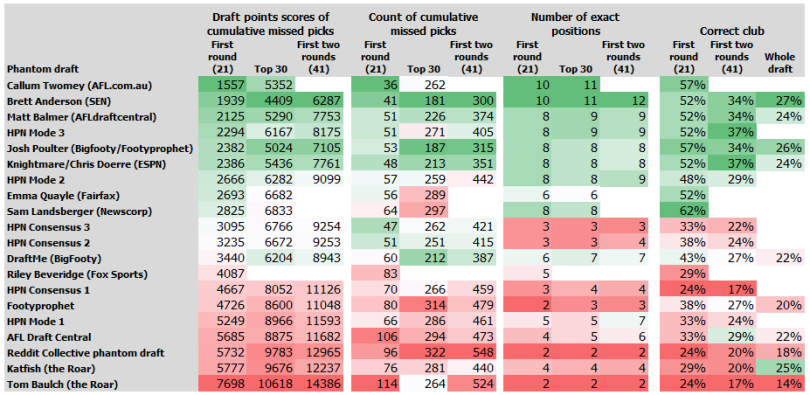

To try to analyse this, HPN have determined four measures of success:

- The number of AFL Draft Points from the actual selections on the night. This measure prioritises early correct selections, and punishes incorrect selections later in the draft at a much lower rate (due to the logarithmic formulation of the Draft Points). Example – if I picked McCluggage at 1 rather than 3, I would lose 766 points. If I missed Willem Drew at pick 33 by one selection, I’d lose about 21 points.

- The count of cumulative missed picks. This is the raw number of picks between the phantom selection and that player’s real selection, a “closest to the pin” measure.

- The number of exact selections correct. This one looks at the exact hits, selecting players at their exact pick.

- The number of players chosen to go to the right club. This measure avoids the uncertainty of the bidding process shuffling pick numbers around, and just looks at whether the phantom draft correctly identified which players would go to which clubs.
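As a rough illustration, the four measures could be computed along these lines. This is a minimal sketch rather than HPN's actual workings: the function name `score_phantom`, the data layout, the player and club names, and the idea of scoring Draft Points as the absolute gap between the phantom and real pick values are all assumptions. The five values in `DRAFT_POINTS` are an illustrative excerpt and should be replaced with the full official table.

```python
# Illustrative excerpt of a pick -> points table. These five values are an
# assumption for this sketch; swap in the full official Draft Points table.
DRAFT_POINTS = {1: 3000, 2: 2517, 3: 2234, 4: 2034, 5: 1878}


def score_phantom(phantom, actual, points=DRAFT_POINTS):
    """Score one phantom draft against the real draft.

    Both `phantom` and `actual` map player name -> (pick number, club).
    """
    scores = {"points_lost": 0, "missed_picks": 0, "exact_hits": 0, "club_hits": 0}
    for player, (real_pick, real_club) in actual.items():
        if player not in phantom:
            continue  # unpicked players would need an imputed pick first
        guess_pick, guess_club = phantom[player]
        # Measure 1: Draft Points gap between the guessed and real pick.
        # Picks outside the table raise KeyError in this sketch.
        scores["points_lost"] += abs(points[guess_pick] - points[real_pick])
        # Measure 2: raw "closest to the pin" distance in picks.
        scores["missed_picks"] += abs(guess_pick - real_pick)
        # Measure 3: exact pick number correct.
        scores["exact_hits"] += int(guess_pick == real_pick)
        # Measure 4: right club, regardless of pick number.
        scores["club_hits"] += int(guess_club == real_club)
    return scores


# Made-up three-pick draft: the phantom swaps picks 1 and 3 and nails pick 2.
actual = {"Player A": (1, "Club X"), "Player B": (2, "Club Y"), "Player C": (3, "Club Z")}
phantom = {"Player A": (3, "Club Z"), "Player B": (2, "Club Y"), "Player C": (1, "Club X")}
result = score_phantom(phantom, actual)
print(result)
```

With this made-up data, each of the two swapped players costs 766 points (the pick 1 versus pick 3 gap from the example above), for 1532 points lost, 4 missed picks, 1 exact hit and 1 club hit.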

Phantom drafts were of different lengths, and a number of players were not selected in all phantoms. To account for this, if a player selected in any of the groupings below (e.g. first round) wasn’t picked by a phantom drafter, HPN imputed a selection at the later of two positions: the latest position at which any phantom drafter picked them, or the pick immediately after the end of that drafter’s own draft (since the phantom drafter clearly rated the player below that position).

For example, only one phantom drafter picked the improbably named Quinton Narkle – placing him at pick 58. Narkle was selected by Geelong at pick 60. As a result, all the other phantom drafters were given the later of pick 58 and the end of their own draft. So a 30-pick phantom draft like those of the professional columnists was given Narkle at 58, while a full 77-pick draft was assumed to rate him at 78.
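The imputation rule can be sketched in a few lines. The function name `impute_pick` and the data layout (each phantom draft as a dict of player to pick number) are assumptions for illustration, as are the placeholder player names; the Narkle numbers come from the example above.

```python
def impute_pick(player, own_draft, all_drafts):
    """Return the pick a phantom drafter is assumed to have given `player`.

    `own_draft` and each entry of `all_drafts` map player name -> pick number.
    Returns None when no phantom drafter picked the player at all.
    """
    if player in own_draft:
        return own_draft[player]  # actually picked, nothing to impute
    picks_elsewhere = [d[player] for d in all_drafts if player in d]
    if not picks_elsewhere:
        return None  # everyone missed this player: leave them out of the ratings
    latest_elsewhere = max(picks_elsewhere)
    # First pick after the end of this drafter's own list.
    end_of_own = max(own_draft.values(), default=0) + 1
    return max(latest_elsewhere, end_of_own)


# Hypothetical drafts: placeholder names, with one drafter taking Narkle at 58.
short_draft = {f"Player {i}": i for i in range(1, 31)}   # 30 picks
full_draft = {f"Player {i}": i for i in range(1, 78)}    # 77 picks
narkle_draft = {f"Player {i}": i for i in range(1, 58)}
narkle_draft["Quinton Narkle"] = 58
drafts = [short_draft, full_draft, narkle_draft]

print(impute_pick("Quinton Narkle", short_draft, drafts))  # 58
print(impute_pick("Quinton Narkle", full_draft, drafts))   # 78
print(impute_pick("Darcy Cameron", short_draft, drafts))   # None
```

The 30-pick draft inherits pick 58 because that is later than its own pick 31, while the 77-pick draft ends up at 78 because its own list already runs past 58.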

For players who everyone missed, such as Sydney’s Darcy Cameron, we removed them from the performance ratings completely.

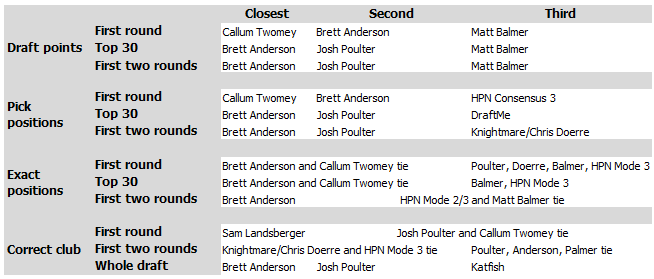

For all four measures the same handful of phantom drafters seem to be the most successful. At the top end, Callum Twomey stands out as the most successful for the first round, topping three of the four measures and finishing second in the fourth. As we look at more selections, Brett Anderson starts to take over, having had the most accurate full mock draft. The most successful “ammos” from last year, Balmer, Poulter and Doerre, continue to rate at the top end of the table, along with newly-added DraftMe at Bigfooty, whose top 30 was third-closest to the pin.

At mid-table we see two drafters representing the big two newspaper organisations, perennial champion Emma Quayle and News’ Sam Landsberger. Quayle seems to have had an off year in 2016, but this still puts her above most of the pack (and most of the professional media outlets). Landsberger did particularly well at matching players to their prospective clubs, especially across late first-round picks, but struggled on the other measures.

Of the professionals, Riley Beveridge struggled the most, but even he correctly matched player to club a quarter of the time. For an incredibly difficult task, this is admirable.

Let’s have a look at the total leaderboard:

If HPN were forced to pick a “winner”, we’d award it to Twomey and Anderson in a photo-finish tie.

However, compared with the results from 2015, all drafters were significantly less accurate than they were 12 months ago. This perhaps speaks to the evenness of the 2016 draft, and perhaps to clubs beginning to play their cards closer to their chests.

Wisdom of the crowd

HPN used two different Consensus Phantom Draft models in 2016, each with its own strengths and weaknesses. The first looked at the average consensus ranking of players, and the second looked at the mode, or most frequently drafted player, at each pick. As the draft drew closer both systems became significantly more accurate. The third edition of both the mode and consensus average performed about twice as well as the first edition of each. Time, and the late publishing of the experts’ opinions, plays a critical role in honing the accuracy of the model. Or, put another way: don’t get too excited about Player X if he’s linked to your club in early October – a lot will change in that timeframe.
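To make the difference between the two models concrete, here is a minimal sketch of both. The function names and the toy data are hypothetical, and the handling of edge cases is an assumption: players are averaged only over the drafts that list them, and ties in average are broken alphabetically. How HPN treated those cases is not stated here.

```python
from collections import Counter, defaultdict
from statistics import mean


def consensus_average(phantoms):
    """Rank players by their average pick across the phantom drafts.

    Each phantom draft is an ordered list of player names (index 0 = pick 1).
    """
    picks = defaultdict(list)
    for draft in phantoms:
        for pick, player in enumerate(draft, start=1):
            picks[player].append(pick)
    # Lower average pick ranks first; the name breaks ties alphabetically.
    return sorted(picks, key=lambda p: (mean(picks[p]), p))


def consensus_mode(phantoms):
    """At each pick, take the player named there by the most phantom drafts."""
    longest = max(len(draft) for draft in phantoms)
    result = []
    for i in range(longest):
        names = Counter(draft[i] for draft in phantoms if len(draft) > i)
        result.append(names.most_common(1)[0][0])
    return result


# Toy data: three hypothetical three-pick phantom drafts.
phantoms = [
    ["Player A", "Player B", "Player C"],
    ["Player A", "Player B", "Player C"],
    ["Player B", "Player A", "Player C"],
]
average_order = consensus_average(phantoms)
mode_order = consensus_mode(phantoms)
print(average_order)
print(mode_order)
```

In this toy case both models agree on the order A, B, C. Note that this naive mode does nothing to stop the same player being the most common name at two different picks, so a real version would need a rule for that.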

The consensus mode draft got more exact matches and also did quite well matching clubs to players. The consensus average ranking draft was third closest to the pin of any phantom draft over the first round, but lost accuracy later in the draft.

Overall, we feel the mode performed a little better this year, which differs from the results last year. As the 2016 draft had a number of highly variable moving parts, some of which bumped players down the pecking order significantly based on the selections of other clubs, using the wisdom of the “most likely” draft worked this year. In other years, with a much clearer distinction of talent at the top end, the consensus average draft will likely perform a little better.

Tomorrow, HPN will use the gap between consensus rankings and reality to identify the much-vaunted “sliders” and “bolters”, and which clubs did better on paper.